Query Fan-Out: The Future of AI-Driven Search

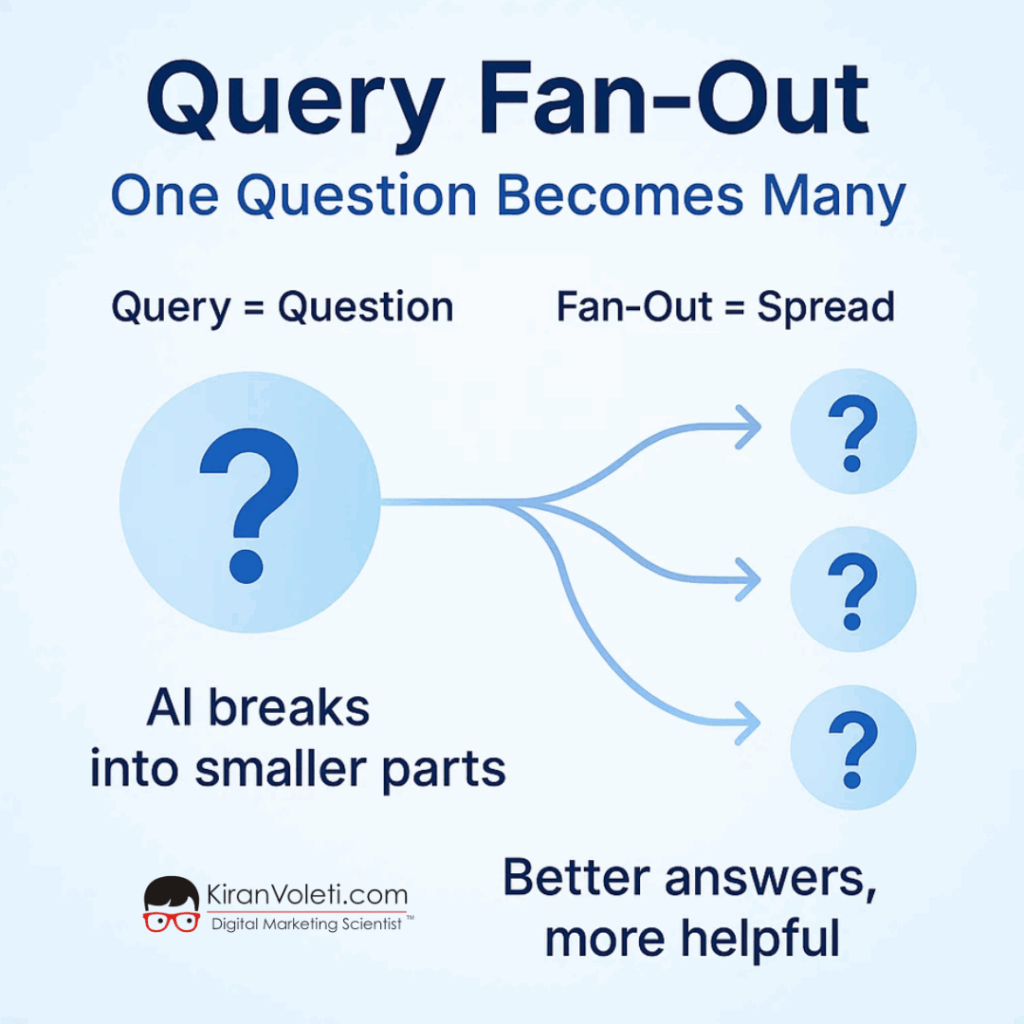

Query Fan-Out is a technique AI-powered search engines use to break a single user query into multiple related sub-queries. This allows for deeper contextual understanding and improved content retrieval. This article explains how it works, why it matters, and how to optimize your content for AI-first search environments.

What Is Query Fan-Out?

Query Fan-Out is when an AI search engine expands a single query into several semantically related sub-queries to generate a more complete response. Instead of matching exact keywords, the engine interprets user intent and retrieves content across various dimensions.

This approach powers AI-enhanced search tools such as Google’s AI Mode, Perplexity.ai, ChatGPT Search, and You.com. These platforms rely on large language models (LLMs) and retrieval-augmented generation (RAG) to structure and deliver responses.

The sub-queries can run sequentially or in parallel, and their results are combined to give comprehensive, relevant coverage. This technique supports advanced information retrieval, especially in AI-powered environments like Google’s Search Generative Experience or OpenAI’s ChatGPT with browsing capabilities.

Google AI Mode is a chat-style, generative search experience powered by Gemini 2.5. Instead of displaying traditional search result links, it provides direct, synthesized answers.

It uses a technique called “Query Fan-Out” to break a single search into multiple related sub-questions, generating a broader response.

Initially launched in the United States, it is expected to roll out globally.

Changes in Search Behavior with AI Mode

- Users remain on Google: Answers are delivered within the interface, reducing outbound clicks.

- Website traffic declines: Fewer clicks mean lower referral traffic for publishers and creators.

- SEO evolution: Traditional click-through-focused strategies are losing effectiveness.

Concerns Among SEOs and Publishers about AI Mode

- A recent poll shows 45% of SEO professionals are “scared out of their minds.”

- AI Mode traffic appears as “Direct” due to noreferrer tags, complicating attribution.

- Google Search Console will show AI Mode data, but with limited detail.

- Publishers argue AI Mode uses content without sending traffic back to the source.

For Marketers and Advertisers

- Google is testing ads within AI Mode and AI Overviews.

- Ad placements are driven by context, intent, query type, and location.

- AI Mode includes shopping features such as virtual try-on, personalized suggestions, and product comparisons.

- This mode will likely evolve into a high-performance ad channel with more precise targeting than traditional search ads.

Key Technical and Functional Shifts about AI Mode

| Feature | Traditional Search | AI Mode |
|---|---|---|
| Search Experience | Link-based results | AI-generated conversational summaries |
| SEO Measurement | Full visibility in GSC | Limited and ambiguous attribution |
| Publisher Traffic | Referral-based | Significantly reduced click-throughs |
| Interface | Static results page | Interactive, chat-style format |
| Ads | Keyword and bid-based | Contextual within AI responses |

Strategic Actions for Businesses and SEOs about AI Mode

- Re-optimize content for AI visibility by ensuring indexing, structured data, and strong entity associations.

- Focus on brand visibility. Being referenced matters more than just ranking.

- Explore Generative Engine Optimization (GEO), a new approach to improve inclusion in AI-generated answers.

- Adapt content strategies across blogs, affiliate sites, and newsletters as external referral traffic declines.

Outlook and Industry Response about AI Mode

Google is promoting AI Mode as the future of search, signaling a shift from the open web to a closed answer-generation model.

News outlets and digital rights groups criticize the model for devaluing original content and reducing access to independent platforms.

As Google expands AI Mode worldwide, legal, ethical, and competitive challenges are expected to grow.

Fundamentals of Query Fan-Out

Definition of Query Fan-Out

Query Fan-Out transforms a single input into a set of related or alternate queries to better capture the user’s intent and improve search accuracy.

Query Expansion

This technique adds synonyms, variations, or related terms to the original query. For example, “laptop deals” may expand to include “notebook offers” or “top laptop discounts.”
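As a minimal sketch of this idea, the snippet below swaps one term at a time for a synonym. The `SYNONYMS` table and the `expand_query` helper are hypothetical names introduced only for illustration; a production system would more likely derive variants from embeddings, query logs, or a language model.

```python
# Hypothetical synonym table, hand-written for this example.
SYNONYMS = {
    "laptop": ["notebook"],
    "deals": ["offers", "discounts"],
}


def expand_query(query: str) -> list[str]:
    """Return the original query plus variants with one term replaced by a synonym."""
    terms = query.lower().split()
    variants = [" ".join(terms)]
    for i, term in enumerate(terms):
        for synonym in SYNONYMS.get(term, []):
            variant = terms[:i] + [synonym] + terms[i + 1:]
            variants.append(" ".join(variant))
    return variants


expanded = expand_query("laptop deals")
print(expanded)
```

Replacing one term per variant keeps each expanded query close to the original wording, which limits drift away from the user's intent.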

Query Refinement

Refinement narrows or clarifies the search after initial results. AI systems use user interactions, click behavior, or detected intent to improve the query iteratively.

Information Retrieval Context

Keyword-Based Search

Traditional systems rely on exact keyword matches. Query Fan-Out improves on this by adding semantically similar terms, which increases the likelihood of retrieving relevant results.

Semantic Search

Semantic search interprets the meaning behind queries. Query Fan-Out supports this by generating multiple semantically linked queries.

Techniques for Query Fan-Out

Boolean Operators

These logical operators structure fan-out queries:

- AND: Narrows results (“data AND privacy”)

- OR: Broadens results (“autonomous OR self-driving”)

- NOT: Excludes specific terms (“cookies NOT chocolate”)
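The three operators map naturally onto set operations over an inverted index. The sketch below assumes a tiny, made-up document collection (`DOCS`) and whitespace tokenization; real engines use far richer analysis, so treat it only as an illustration of the logic.

```python
from collections import defaultdict

# Hypothetical documents, invented for this example.
DOCS = {
    1: "data privacy rules for autonomous vehicles",
    2: "self-driving cars and road safety",
    3: "chocolate chip cookies recipe",
    4: "browser cookies and data privacy",
}


def build_index(docs: dict[int, str]) -> dict[str, set[int]]:
    """Map each lowercase token to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            index[token].add(doc_id)
    return index


INDEX = build_index(DOCS)

and_hits = INDEX["data"] & INDEX["privacy"]            # AND: intersection
or_hits = INDEX["autonomous"] | INDEX["self-driving"]  # OR: union
not_hits = INDEX["cookies"] - INDEX["chocolate"]       # NOT: difference

print(sorted(and_hits), sorted(or_hits), sorted(not_hits))
```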

Phrase Matching

- Exact Phrase Search: Uses quotes to match precise phrases (“digital marketing”)

- Proximity Search: Finds terms within a specific word distance (“machine NEAR learning”)
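Both matching modes can be sketched with plain token positions. The helper names `phrase_match` and `near_match`, and the default distance of 3 words, are assumptions made for this example rather than the behavior of any particular search engine.

```python
def tokenize(text: str) -> list[str]:
    return text.lower().split()


def phrase_match(text: str, phrase: str) -> bool:
    """True if the words of `phrase` appear consecutively and in order in `text`."""
    words, target = tokenize(text), tokenize(phrase)
    n = len(target)
    return any(words[i:i + n] == target for i in range(len(words) - n + 1))


def near_match(text: str, a: str, b: str, max_distance: int = 3) -> bool:
    """True if `a` and `b` occur within `max_distance` word positions of each other."""
    words = tokenize(text)
    pos_a = [i for i, w in enumerate(words) if w == a]
    pos_b = [i for i, w in enumerate(words) if w == b]
    return any(abs(i - j) <= max_distance for i in pos_a for j in pos_b)


print(phrase_match("a guide to digital marketing basics", "digital marketing"))
print(near_match("machine and deep learning methods", "machine", "learning"))
```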

Query Suggestion

- Autocomplete: Predicts user input as they type, often using prior data.

- Related Queries: Suggests alternative queries based on common search patterns.
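A simple way to picture autocomplete is prefix matching over past queries, ordered by how often each was searched. The `QUERY_LOG` list and the `autocomplete` function below are hypothetical; real systems also weigh freshness, personalization, and safety filters.

```python
from collections import Counter

# Hypothetical search log, invented for this example.
QUERY_LOG = [
    "laptop deals", "laptop deals", "laptop stand",
    "laptop deals", "laptop stand", "lawn mower",
]


def autocomplete(prefix: str, log: list[str], limit: int = 3) -> list[str]:
    """Suggest the most frequent logged queries that start with `prefix`."""
    normalized = prefix.lower()
    counts = Counter(q for q in log if q.startswith(normalized))
    return [query for query, _ in counts.most_common(limit)]


suggestions = autocomplete("lap", QUERY_LOG)
print(suggestions)
```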

Applications of Query Fan-Out

Web Search Engines

- Google Search: Uses fan-out in AI snippets and “People also ask” features.

- Bing Search: Employs semantic clusters and intelligent answers.

Enterprise Search

- Intranet Search: Uses fan-out to retrieve internal documents more effectively.

- Document Management: Improves classification and retrieval through expanded queries.

E-Commerce Search

- Product Search: Expands queries to include brand, price, or specifications.

- Faceted Navigation: Applies filters such as size or ratings as query dimensions.

Evaluation Metrics

Precision

Measures the share of retrieved documents that are relevant. Broad fan-out may reduce precision by retrieving unrelated results.

Recall

Measures the share of relevant documents that are retrieved. Fan-out often improves recall by covering more variations of the query.

F1-Score

This is the harmonic mean of precision and recall. An effective fan-out strategy balances both.
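The three metrics can be computed directly from sets of document ids. In the sketch below, the document ids and the `narrow` and `broad` result sets are invented to show how a wider fan-out can raise recall while lowering precision.

```python
def precision_recall_f1(retrieved: set, relevant: set) -> tuple[float, float, float]:
    """Compute precision, recall, and F1 for one result set."""
    true_positives = len(retrieved & relevant)
    precision = true_positives / len(retrieved) if retrieved else 0.0
    recall = true_positives / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


relevant = {"d1", "d2", "d3", "d4"}              # hypothetical ground truth
narrow = {"d1", "d2"}                            # single-query results
broad = {"d1", "d2", "d3", "d5", "d6", "d7"}     # fan-out results

narrow_scores = precision_recall_f1(narrow, relevant)
broad_scores = precision_recall_f1(broad, relevant)
print(narrow_scores, broad_scores)
```

In this made-up case the broad set finds one more relevant document but also pulls in three irrelevant ones, so recall rises while precision and F1 fall.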

Challenges

Query Ambiguity

- Polysemy: Some words have multiple meanings (e.g., “bank”).

- Context Dependency: The meaning of terms like “Apple” can change based on context.

Information Overload

- Result Ranking: Too many results may reduce overall quality.

- Filtering: Requires effective ranking algorithms to prioritize the most useful results.

Research and Trends

Natural Language Processing (NLP)

- Query Understanding: NLP models like BERT interpret intent more accurately.

- Query Reformulation: AI rephrases queries to better align with indexed content.

Machine Learning

- Learning to Rank (LTR): Models rank fan-out results by estimated relevance.

- Query Recommendation: Predicts the next best queries based on historical data.

Query Fan-Out Techniques

| Technique | Purpose | Example |
|---|---|---|
| Query Expansion | Broaden semantic scope | “laptop” → “notebook” |
| Boolean Operators | Combine or exclude terms | “AI AND ethics” |
| Phrase Matching | Match precise or nearby terms | “cloud computing” |
| Query Suggestion | Offer alternative inputs | Autocomplete, Related Queries |

Query Fan-Out Evaluation Metrics

| Metric | Goal | Impact of Fan-Out |
|---|---|---|
| Precision | Retrieve only relevant items | May decrease |
| Recall | Retrieve all relevant items | Often increases |
| F1-Score | Balance precision and recall | Target improvement |

Why Query Fan-Out Matters in 2025

How Search Behavior Is Evolving

Users increasingly expect search engines to provide synthesized, context-aware answers, not just links. For example, when someone searches:

“How can AI reduce urban traffic congestion?”

The system may expand the query into:

- “AI in traffic light optimization”

- “Mobility data in smart cities”

- “Real-time traffic prediction models”

- “Urban planning case studies”

This expansion enables more detailed and targeted results.

Impact on SEO

Keyword-based SEO is less effective in this model. Instead, content should:

- Be structured into thematic topic clusters

- Support entity-based metadata

- Be compatible with vector-based retrieval systems

How Query Fan-Out Works

Technical Process

| Step | Description |
|---|---|
| 1. Intent Disambiguation | The LLM identifies the core meaning of the query. |
| 2. Sub-Query Expansion | It generates related questions using embeddings and knowledge graphs. |
| 3. Parallel Retrieval | Sub-queries search across neural indexes like FAISS or Vespa. |
| 4. Fusion and Ranking | Relevant content fragments are selected, ranked, and compiled. |
| 5. Answer Synthesis | The model generates a structured and coherent response. |
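Steps 2 through 4 can be sketched in a few lines. Everything here is a stand-in: `fan_out` returns hard-coded sub-queries where a real system would call an LLM, `retrieve` scores a tiny in-memory `CORPUS` by word overlap instead of querying a vector index, and `fuse` uses reciprocal rank fusion, which is one common merging method and not necessarily what any given search engine uses. Intent disambiguation and answer synthesis are left out because they depend on a language model.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical corpus, invented for this example.
CORPUS = {
    "doc_signals": "ai traffic light optimization reduces congestion",
    "doc_mobility": "mobility data in smart cities",
    "doc_predict": "real-time traffic prediction models",
    "doc_recipes": "weeknight pasta recipes",
}


def fan_out(query: str) -> list[str]:
    """Step 2 stand-in: a real system would generate these with an LLM."""
    return [
        "ai traffic light optimization",
        "mobility data smart cities",
        "real-time traffic prediction",
    ]


def retrieve(sub_query: str, top_k: int = 2) -> list[str]:
    """Step 3 stand-in: rank documents by word overlap with the sub-query."""
    terms = set(sub_query.lower().split())
    scored = []
    for doc_id, text in CORPUS.items():
        overlap = len(terms & set(text.split()))
        if overlap:
            scored.append((overlap, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def fuse(ranked_lists: list[list[str]], k: int = 60) -> list[str]:
    """Step 4: reciprocal rank fusion; documents ranked high in several lists win."""
    scores: dict[str, float] = {}
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: (-scores[d], d))


def answer_sources(query: str) -> list[str]:
    sub_queries = fan_out(query)
    with ThreadPoolExecutor() as pool:  # run the sub-queries in parallel
        ranked_lists = list(pool.map(retrieve, sub_queries))
    return fuse(ranked_lists)


results = answer_sources("How can AI reduce urban traffic congestion?")
print(results)
```

Documents that surface for more than one sub-query accumulate score during fusion, which is why content covering several facets of a topic tends to do well under fan-out.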

Benefits of Query Fan-Out

For Users

- Access to comprehensive, multifaceted answers

- Improved understanding of complex topics

- Results aligned with individual intent

For Content Creators and SEOs

- Opportunities to rank through content sections, not just full pages

- Encourages structured, interconnected content strategies

- Aligns with LLM-powered indexing methods

Structuring Content for AI Search

Use Topic Clusters

Break broader topics into focused, interlinked subtopics:

- “AI for customer segmentation”

- “Predictive analytics in email campaigns”

- “Chatbots and customer experience”

Apply Contextual Vocabulary

Use terms aligned with semantic models, such as:

- “Predictive personalization”

- “LLM-based targeting”

- “Zero-party data strategy”

Avoid repeating generic terms like “AI marketing” without context.

Support Retrieval-Augmented Generation (RAG)

Format content for AI retrieval tools:

- Use clear answers to specific questions

- Include structured elements like lists and tables

- Add FAQs based on actual search queries

Preparing for Neural Indexing

Neural search engines operate on vector similarity, not keyword matches. Your content should prioritize:

- Clarity: Define specific, unambiguous topics

- Depth: Include diverse examples, statistics, and comparisons

- Structure: Use clean headers, proper HTML semantics, and schema markup

Example

Replace vague titles like:

“AI and Business”

With specific titles like:

“How AI Chatbots Improve Customer Retention for Small Businesses”
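To see why the specific title tends to score better, here is a toy cosine-similarity comparison. The `embed` function is only a bag-of-words stand-in for a real embedding model, so it rewards shared words rather than shared meaning; the point is the ranking mechanics, not the quality of the vectors. The sample query is an assumption made for this example.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a term-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


query = embed("ai chatbots customer retention")  # hypothetical sub-query
specific = embed("how ai chatbots improve customer retention for small businesses")
vague = embed("ai and business")

specific_score = cosine(query, specific)
vague_score = cosine(query, vague)
print(round(specific_score, 3), round(vague_score, 3))
```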

Query Fan-Out Example

Original Query

“Benefits of solar panels in smart cities”

AI-Generated Sub-Queries

- “How solar panels improve energy efficiency”

- “Solar integration with smart grids”

- “Examples of solar use in city infrastructure”

- “Economic return on solar in urban areas”

Retrieved Sources

- IEA study on decentralized energy

- Report on Amsterdam’s solar initiatives

- Indian policy documents on renewable energy

AI Summary Output

“Solar panels in smart cities enhance energy independence, lower grid pressure, and support local power generation. For example, Amsterdam’s rooftop solar network saves over 10 million kWh annually.”

Steps for Content Creators and SEO Professionals

| Step | Task |
|---|---|
| 1 | Use semantic H2/H3 headers aligned with user questions |
| 2 | Add structured data (FAQ, Article, Breadcrumb) |
| 3 | Interlink related topics to form a content graph |
| 4 | Prioritize content depth and relevance over keyword count |
| 5 | Include first-party data or unique observations |
| 6 | Use proper schema.org markup |
| 7 | Analyze visibility in AI-native platforms |
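For the structured data step, FAQ markup is typically expressed as schema.org `FAQPage` JSON-LD. The sketch below builds that object from question and answer pairs; the `faq_jsonld` helper is a hypothetical name, and the sample pair is taken from the FAQ section of this article.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    (
        "What is the purpose of Query Fan-Out?",
        "It improves search quality by generating multiple related queries "
        "to match user intent.",
    ),
])
print(json.dumps(faq, indent=2))
```

The resulting JSON is usually embedded in the page inside a `<script type="application/ld+json">` element.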

Closing Thoughts on the Future of Search

Query Fan-Out signals a shift in how search engines interpret and display information. Content must now be precise, structured, and designed for intelligent systems to analyze.

To adapt:

- Emphasize semantic relevance and topic clarity

- Create structured, linkable content segments

- Design with retrieval-augmented generation in mind

Writers and marketers who embrace these practices will remain discoverable and relevant in a rapidly evolving AI-driven search environment.

Key takeaways:

- Apply fan-out strategies to boost search recall and relevance.

- Combine semantic understanding with keyword logic.

- Use NLP and machine learning for smarter query reformulation.

- Evaluate performance using F1-score, not just precision.

- Employ intelligent ranking to manage large result sets.

FAQs About Query Fan-Out

How does Query Fan-Out differ from traditional search?

Traditional search ranks pages by keyword relevance. Query Fan-Out creates and ranks sub-questions, retrieving the most relevant pieces of content to form a synthesized answer.

Is Query Fan-Out better for long-form queries?

Yes, especially for complex queries that cover multiple dimensions of user intent.

How can I check if my content works with Query Fan-Out?

Use tools like Perplexity.ai, You.com, or Bing AI to test whether your content is retrieved, how it’s summarized, and which segments are highlighted.

What is the purpose of Query Fan-Out?

It improves search quality by generating multiple related queries to match user intent.

How does it improve AI search?

It creates broader, more accurate context paths, helping AI models return better results.

Is Query Fan-Out the same as Query Expansion?

No. Query expansion is one part of fan-out, which also includes structural changes, refinement, and related suggestions.